Neurolinguistics

Neurolinguistics is the study of neural mechanisms in the human brain that control the comprehension, production, and acquisition of language. As an interdisciplinary field, neurolinguistics draws methods and theories from fields such as neuroscience, linguistics, cognitive science, communication disorders and neuropsychology. Researchers are drawn to the field from a variety of backgrounds, bringing along a variety of experimental techniques as well as widely varying theoretical perspectives. Much work in neurolinguistics is informed by models in psycholinguistics and theoretical linguistics, and is focused on investigating how the brain can implement the processes that theoretical linguistics and psycholinguistics propose are necessary in producing and comprehending language. Neurolinguists study the physiological mechanisms by which the brain processes information related to language, and evaluate linguistic and psycholinguistic theories, using aphasiology, brain imaging, electrophysiology, and computer modeling.[1]

History

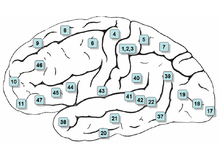

Neurolinguistics is historically rooted in the development in the 19th century of aphasiology, the study of linguistic deficits (aphasias) occurring as the result of brain damage.[2] Aphasiology attempts to correlate structure to function by analyzing the effect of brain injuries on language processing.[3] One of the first people to draw a connection between a particular brain area and language processing was Paul Broca,[2] a French surgeon who conducted autopsies on numerous individuals who had speaking deficiencies, and found that most of them had brain damage (or lesions) on the left frontal lobe, in an area now known as Broca's area. Phrenologists had made the claim in the early 19th century that different brain regions carried out different functions and that language was mostly controlled by the frontal regions of the brain, but Broca's research was possibly the first to offer empirical evidence for such a relationship,[4][5] and has been described as "epoch-making"[6] and "pivotal"[4] to the fields of neurolinguistics and cognitive science. Later, Carl Wernicke, after whom Wernicke's area is named, proposed that different areas of the brain were specialized for different linguistic tasks, with Broca's area handling the motor production of speech, and Wernicke's area handling auditory speech comprehension.[2][3] The work of Broca and Wernicke established the field of aphasiology and the idea that language can be studied through examining physical characteristics of the brain.[5] Early work in aphasiology also benefited from the early twentieth-century work of Korbinian Brodmann, who "mapped" the surface of the brain, dividing it up into numbered areas based on each area's cytoarchitecture (cell structure) and function;[7] these areas, known as Brodmann areas, are still widely used in neuroscience today.[8]

The coining of the term neurolinguistics in the late 1940s and 1950s is attributed to Edith Crowell Trager, Henri Hecaen and Alexandr Luria. Luria's 1976 book "Basic Problems of Neurolinguistics" is likely the first book with "neurolinguistics" in the title. Harry Whitaker popularized neurolinguistics in the United States in the 1970s, founding the journal "Brain and Language" in 1974.[9]

Although aphasiology is the historical core of neurolinguistics, in recent years the field has broadened considerably, thanks in part to the emergence of new brain imaging technologies (such as PET and fMRI) and time-sensitive electrophysiological techniques (EEG and MEG), which can highlight patterns of brain activation as people engage in various language tasks.[2][10][11] Electrophysiological techniques, in particular, emerged as a viable method for the study of language in 1980 with the discovery of the N400, a brain response shown to be sensitive to semantic issues in language comprehension.[12][13] The N400 was the first language-relevant event-related potential to be identified, and since its discovery EEG and MEG have become increasingly widely used for conducting language research.[14]

Discipline

Interaction with other fields

Neurolinguistics is closely related to the field of psycholinguistics, which seeks to elucidate the cognitive mechanisms of language by employing the traditional techniques of experimental psychology. Today, psycholinguistic and neurolinguistic theories often inform one another, and there is much collaboration between the two fields.[13][15]

Much work in neurolinguistics involves testing and evaluating theories put forth by psycholinguists and theoretical linguists. In general, theoretical linguists propose models to explain the structure of language and how language information is organized, psycholinguists propose models and algorithms to explain how language information is processed in the mind, and neurolinguists analyze brain activity to infer how biological structures (populations and networks of neurons) carry out those psycholinguistic processing algorithms.[16] For example, experiments in sentence processing have used the ELAN, N400, and P600 brain responses to examine how physiological brain responses reflect the different predictions of sentence processing models put forth by psycholinguists, such as Janet Fodor and Lyn Frazier's "serial" model,[17] and Theo Vosse and Gerard Kempen's "unification model".[15] Neurolinguists can also make new predictions about the structure and organization of language based on insights about the physiology of the brain, by "generalizing from the knowledge of neurological structures to language structure".[18]

Neurolinguistics research is carried out in all the major areas of linguistics; the main linguistic subfields, and how neurolinguistics addresses them, are given in the table below.

| Subfield | Description | Research questions in neurolinguistics |
|---|---|---|
| Phonetics | the study of speech sounds | how the brain extracts speech sounds from an acoustic signal, how the brain separates speech sounds from background noise |
| Phonology | the study of how sounds are organized in a language | how the phonological system of a particular language is represented in the brain |
| Morphology and lexicology | the study of how words are structured and stored in the mental lexicon | how the brain stores and accesses words that a person knows |
| Syntax | the study of how multiple-word utterances are constructed | how the brain combines words into constituents and sentences; how structural and semantic information is used in understanding sentences |
| Semantics | the study of how meaning is encoded in language | |

Topics considered

Neurolinguistics research investigates several topics, including where language information is processed, how language processing unfolds over time, how brain structures are related to language acquisition and learning, and how neurophysiology can contribute to speech and language pathology.

Localizations of language processes

Much work in neurolinguistics has, like Broca's and Wernicke's early studies, investigated the locations of specific language "modules" within the brain. Research questions include what course language information follows through the brain as it is processed,[19] whether or not particular areas specialize in processing particular sorts of information,[20] how different brain regions interact with one another in language processing,[21] and how the locations of brain activation differ when a subject is producing or perceiving a language other than his or her first language.[22][23][24]

Time course of language processes

Another area of neurolinguistics literature involves the use of electrophysiological techniques to analyze the rapid processing of language in time.[2] The temporal ordering of specific patterns of brain activity may reflect discrete computational processes that the brain undergoes during language processing; for example, one neurolinguistic theory of sentence parsing proposes that three brain responses (the ELAN, N400, and P600) are products of three different steps in syntactic and semantic processing.[25]

Language acquisition

Another topic is the relationship between brain structures and language acquisition.[26] Research in first language acquisition has already established that infants from all linguistic environments go through similar and predictable stages (such as babbling), and some neurolinguistics research attempts to find correlations between stages of language development and stages of brain development,[27] while other research investigates the physical changes (known as neuroplasticity) that the brain undergoes during second language acquisition, when adults learn a new language.[28] Neuroplasticity has been observed in connection with both second language acquisition and language learning experience; such language exposure has been associated with increases in gray and white matter in children, young adults and the elderly.[29]

Language pathology

Neurolinguistic techniques are also used to study disorders and breakdowns in language, such as aphasia and dyslexia, and how they relate to physical characteristics of the brain.[23][27]

Technology used

Since one of the focuses of this field is the testing of linguistic and psycholinguistic models, the technology used for experiments is highly relevant to the study of neurolinguistics. Modern brain imaging techniques have contributed greatly to a growing understanding of the anatomical organization of linguistic functions.[2][23] Brain imaging methods used in neurolinguistics may be classified into hemodynamic methods, electrophysiological methods, and methods that stimulate the cortex directly.

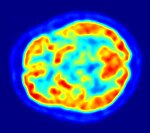

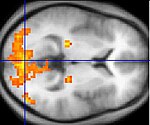

Hemodynamic

Hemodynamic techniques take advantage of the fact that when an area of the brain works at a task, blood is sent to supply that area with oxygen (in what is known as the Blood Oxygen Level-Dependent, or BOLD, response).[30] Such techniques include PET and fMRI. These techniques provide high spatial resolution, allowing researchers to pinpoint the location of activity within the brain;[2] temporal resolution (or information about the timing of brain activity), on the other hand, is poor, since the BOLD response happens much more slowly than language processing.[11][31] In addition to demonstrating which parts of the brain may subserve specific language tasks or computations,[20][25] hemodynamic methods have also been used to demonstrate how the structure of the brain's language architecture and the distribution of language-related activation may change over time, as a function of linguistic exposure.[22][28]

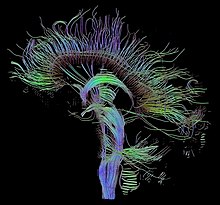

In addition to PET and fMRI, which show which areas of the brain are activated by certain tasks, researchers also use diffusion tensor imaging (DTI), which shows the neural pathways that connect different brain areas,[32] thus providing insight into how different areas interact. Functional near-infrared spectroscopy (fNIRS) is another hemodynamic method used in language tasks.[33]

Electrophysiological

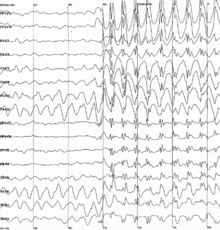

Electrophysiological techniques take advantage of the fact that when a group of neurons in the brain fire together, they create an electric dipole or current. The technique of EEG measures this electric current using sensors on the scalp, while MEG measures the magnetic fields that are generated by these currents.[34] In addition to these non-invasive methods, electrocorticography has also been used to study language processing. These techniques are able to measure brain activity from one millisecond to the next, providing excellent temporal resolution, which is important in studying processes that take place as quickly as language comprehension and production.[34] On the other hand, the location of brain activity can be difficult to identify in EEG;[31][35] consequently, this technique is used primarily to study how language processes are carried out, rather than where. Research using EEG and MEG generally focuses on event-related potentials (ERPs),[31] which are distinct brain responses (generally realized as negative or positive peaks on a graph of neural activity) elicited in response to a particular stimulus. Studies using ERP may focus on each ERP's latency (how long after the stimulus the ERP begins or peaks), amplitude (how high or low the peak is), or topography (where on the scalp the ERP response is picked up by sensors).[36] Some important and common ERP components include the N400 (a negativity occurring at a latency of about 400 milliseconds),[31] the mismatch negativity,[37] the early left anterior negativity (a negativity occurring at an early latency and a front-left topography),[38] the P600,[14][39] and the lateralized readiness potential.[40]
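
The latency and amplitude measures described above can be illustrated with a minimal sketch. It assumes single-channel epochs that are already time-locked to stimulus onset and averages them into an ERP; the function names, the sampling rate, and the toy voltages are hypothetical and chosen only for illustration. Topography is not covered, since it would require several sensors.

```python
from statistics import mean

def average_erp(epochs):
    """Average equal-length single-trial epochs sample by sample."""
    return [mean(samples) for samples in zip(*epochs)]

def peak_measures(erp, sample_rate_hz, window_ms, polarity):
    """Return (latency_ms, amplitude) of the most extreme point in a window.

    polarity is -1 for a negativity and +1 for a positivity.
    Sample 0 is assumed to coincide with stimulus onset.
    """
    ms_per_sample = 1000.0 / sample_rate_hz
    start = int(window_ms[0] / ms_per_sample)
    stop = int(window_ms[1] / ms_per_sample) + 1
    window = erp[start:stop]
    # Index of the largest value in the requested direction
    idx = max(range(len(window)), key=lambda i: polarity * window[i])
    return (start + idx) * ms_per_sample, window[idx]

# Toy data (hypothetical): three trials at 100 Hz, values in microvolts
epochs = [
    [0.0, 0.5, -1.0, -3.0, -5.0, -2.0, 0.0],
    [0.0, 0.3, -1.2, -2.5, -4.0, -2.5, 0.2],
    [0.0, 0.1, -0.8, -3.5, -6.0, -1.5, -0.2],
]
erp = average_erp(epochs)
latency_ms, amplitude_uv = peak_measures(erp, 100, (20, 60), polarity=-1)
print(latency_ms, amplitude_uv)
```

Real recordings are typically filtered, baseline-corrected and averaged over many more trials before peaks are measured; the sketch leaves those steps out.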

Experimental design

Experimental techniques

Neurolinguists employ a variety of experimental techniques in order to use brain imaging to draw conclusions about how language is represented and processed in the brain. These techniques include the subtraction paradigm, mismatch design, violation-based studies, various forms of priming, and direct stimulation of the brain.

Subtraction

Many language studies, particularly in fMRI, use the subtraction paradigm,[41] in which brain activation in a task thought to involve some aspect of language processing is compared against activation in a baseline task thought to involve similar non-linguistic processes but not to involve the linguistic process. For example, activations while participants read words may be compared to baseline activations while participants read strings of random letters (in an attempt to isolate activation related to lexical processing, that is, the processing of real words), or activations while participants read syntactically complex sentences may be compared to baseline activations while participants read simpler sentences.
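
As a rough illustration of the subtraction logic, the sketch below subtracts baseline activation from task activation region by region. The region names, signal values and threshold are hypothetical, and an actual fMRI analysis would rely on statistical tests across many voxels and participants rather than on a fixed cut-off.

```python
def subtraction_contrast(task, baseline):
    """Subtract baseline activation from task activation, region by region."""
    return {region: task[region] - baseline[region] for region in task}

# Hypothetical mean signal values (arbitrary units) for two conditions
words = {"region_A": 1.8, "region_B": 2.5, "region_C": 0.9}
letter_strings = {"region_A": 1.7, "region_B": 1.0, "region_C": 0.9}

contrast = subtraction_contrast(words, letter_strings)

# Regions whose activation exceeds the baseline by more than a chosen margin
threshold = 0.5  # hypothetical cut-off
candidates = [region for region, diff in contrast.items() if diff > threshold]
print(candidates)
```

Only the region that responds more strongly to words than to letter strings survives the subtraction; activation shared by both tasks cancels out.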

Mismatch paradigm

The mismatch negativity (MMN) is a rigorously documented ERP component frequently used in neurolinguistic experiments.[37][42] It is an electrophysiological response that occurs in the brain when a subject hears a "deviant" stimulus in a set of perceptually identical "standards" (as in the sequence s s s s s s s d d s s s s s s d s s s s s d).[43][44] Since the MMN is elicited only in response to a rare "oddball" stimulus in a set of other stimuli that are perceived to be the same, it has been used to test how speakers perceive sounds and organize stimuli categorically.[45][46] For example, a landmark study by Colin Phillips and colleagues used the mismatch negativity as evidence that subjects, when presented with a series of speech sounds with varying acoustic parameters, perceived all the sounds as either /t/ or /d/ in spite of the acoustic variability, suggesting that the human brain has representations of abstract phonemes; in other words, the subjects were "hearing" not the specific acoustic features, but only the abstract phonemes.[43] In addition, the mismatch negativity has been used to study syntactic processing and the recognition of word category.[37][42][47]
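
A minimal sketch of the oddball design follows. It generates a sequence of frequent standards ("s") and rare deviants ("d") and computes a deviant-minus-standard difference wave, which is how a mismatch response is commonly quantified. The deviant probability, the seed and the toy waveforms are assumptions made for illustration and are not taken from any of the cited studies.

```python
import random

def oddball_sequence(n_trials, deviant_prob, seed=0):
    """Return a list of 's' (standard) and 'd' (deviant) trial labels."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return ["d" if rng.random() < deviant_prob else "s" for _ in range(n_trials)]

def difference_wave(standard_erp, deviant_erp):
    """Deviant-minus-standard difference, sample by sample."""
    return [d - s for s, d in zip(standard_erp, deviant_erp)]

sequence = oddball_sequence(n_trials=200, deviant_prob=0.125)

# Hypothetical averaged responses (microvolts) to standards and deviants
standard_erp = [0.0, 1.0, 1.0, 0.0]
deviant_erp = [0.0, 1.0, -1.0, -0.5]
mismatch = difference_wave(standard_erp, deviant_erp)
print(" ".join(sequence[:20]))
print(mismatch)
```

A negative deflection in the difference wave is what would be read as a mismatch response in this toy example.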

Violation-based

Many studies in neurolinguistics take advantage of anomalies or violations of syntactic or semantic rules in experimental stimuli, and analyze the brain responses elicited when a subject encounters these violations. For example, sentences beginning with phrases such as *the garden was on the worked,[48] which violates an English phrase structure rule, often elicit a brain response called the early left anterior negativity (ELAN).[38] Violation techniques have been in use since at least 1980,[38] when Kutas and Hillyard first reported ERP evidence that semantic violations elicited an N400 effect.[49] Using similar methods, in 1992, Lee Osterhout first reported the P600 response to syntactic anomalies.[50] Violation designs have also been used for hemodynamic studies (fMRI and PET): Embick and colleagues, for example, used grammatical and spelling violations to investigate the location of syntactic processing in the brain using fMRI.[20] Another common use of violation designs is to combine two kinds of violations in the same sentence and thus make predictions about how different language processes interact with one another; this type of crossing-violation study has been used extensively to investigate how syntactic and semantic processes interact while people read or hear sentences.[51][52]
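
The crossing-violation design mentioned above can be sketched as a 2 × 2 factorial layout. The code below enumerates the four conditions and computes a simple interaction contrast, that is, how much the effect of a syntactic violation changes when a semantic violation is also present. The amplitude values are hypothetical, and the contrast is one common way to express an interaction rather than the analysis used in the cited studies.

```python
from itertools import product

# The four cells of the design: each factor is either intact or violated
conditions = [
    {"syntax_ok": syn, "semantics_ok": sem}
    for syn, sem in product([True, False], repeat=2)
]

def interaction(amplitudes):
    """Interaction contrast for amplitudes keyed by (syntax_ok, semantics_ok)."""
    syntax_effect_sem_ok = amplitudes[(False, True)] - amplitudes[(True, True)]
    syntax_effect_sem_bad = amplitudes[(False, False)] - amplitudes[(True, False)]
    return syntax_effect_sem_bad - syntax_effect_sem_ok

# Hypothetical mean amplitudes (microvolts) in some time window
amplitudes = {
    (True, True): 0.0,     # control sentence
    (False, True): 4.0,    # syntactic violation only
    (True, False): -3.0,   # semantic violation only
    (False, False): -1.0,  # both violations
}
print(len(conditions), interaction(amplitudes))
```

A contrast of zero would mean the two kinds of violation have purely additive effects; a non-zero value suggests that the two processes interact.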

Priming

In psycholinguistics and neurolinguistics, priming refers to the phenomenon whereby a subject can recognize a word more quickly if he or she has recently been presented with a word that is similar in meaning[53] or morphological makeup (i.e., composed of similar parts).[54] For example, if a subject is presented with a "prime" word such as doctor and then a "target" word such as nurse, and responds to nurse faster than usual, then the experimenter may assume that the word nurse had already been accessed in the brain when the word doctor was accessed.[55] Priming is used to investigate a wide variety of questions about how words are stored and retrieved in the brain[54][56] and how structurally complex sentences are processed.[57]
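
A priming effect of this kind is often summarized as the difference in mean response time between targets that follow an unrelated prime and targets that follow a related prime. The sketch below assumes a hypothetical list of trial records; the field names, the words other than doctor and nurse, and the response times are invented for illustration.

```python
from statistics import mean

# Hypothetical trials: response time (ms) to a target after a prime
trials = [
    {"prime": "doctor", "target": "nurse", "related": True, "rt_ms": 520},
    {"prime": "table", "target": "nurse", "related": False, "rt_ms": 580},
    {"prime": "bread", "target": "butter", "related": True, "rt_ms": 540},
    {"prime": "cloud", "target": "butter", "related": False, "rt_ms": 600},
]

def priming_effect(trials):
    """Mean unrelated RT minus mean related RT; positive means facilitation."""
    related = [t["rt_ms"] for t in trials if t["related"]]
    unrelated = [t["rt_ms"] for t in trials if not t["related"]]
    return mean(unrelated) - mean(related)

effect_ms = priming_effect(trials)
print(effect_ms)
```

A positive value indicates that related primes sped up responses; whether such a difference is reliable would still need to be tested statistically.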

Stimulation

Transcranial magnetic stimulation (TMS), a new noninvasive[58] technique for studying brain activity, uses powerful magnetic fields that are applied to the brain from outside the head.[59] It is a method of exciting or interrupting brain activity in a specific and controlled location, and thus is able to imitate aphasic symptoms while giving the researcher more control over exactly which parts of the brain will be examined.[59] As such, it is a less invasive alternative to direct cortical stimulation, which can be used for similar types of research but requires that the cortex be surgically exposed, and is thus only used on individuals who are already undergoing a major brain operation (such as individuals undergoing surgery for epilepsy).[60] The logic behind TMS and direct cortical stimulation is similar to the logic behind aphasiology: if a particular language function is impaired when a specific region of the brain is knocked out, then that region must be somehow implicated in that language function. Few neurolinguistic studies to date have used TMS;[2] direct cortical stimulation and cortical recording (recording brain activity using electrodes placed directly on the brain) have been used with macaque monkeys to make predictions about the behavior of human brains.[61]

Subject tasks

In many neurolinguistics experiments, subjects do not simply sit and listen to or watch stimuli, but also are instructed to perform some sort of task in response to the stimuli.[62] Subjects perform these tasks while recordings (electrophysiological or hemodynamic) are being taken, usually in order to ensure that they are paying attention to the stimuli.[63] At least one study has suggested that the task the subject does has an effect on the brain responses and the results of the experiment.[64]

Lexical decision

The lexical decision task involves subjects seeing or hearing an isolated word and answering whether or not it is a real word. It is frequently used in priming studies, since subjects are known to make a lexical decision more quickly if a word has been primed by a related word (as in "doctor" priming "nurse").[53][54][55]

Grammaticality judgment, acceptability judgment

Many studies, especially violation-based studies, have subjects make a decision about the "acceptability" (usually grammatical acceptability or semantic acceptability) of stimuli.[64][65][66][67][68] Such a task is often used to "ensure that subjects [are] reading the sentences attentively and that they [distinguish] acceptable from unacceptable sentences in the way the [experimenter] expect[s] them to do."[66]

Experimental evidence has shown that the instructions given to subjects in an acceptability judgment task can influence the subjects' brain responses to stimuli. One experiment showed that when subjects were instructed to judge the "acceptability" of sentences they did not show an N400 brain response (a response commonly associated with semantic processing), but that they did show that response when instructed to ignore grammatical acceptability and only judge whether or not the sentences "made sense".[64]

Probe verification

Some studies use a "probe verification" task rather than an overt acceptability judgment; in this paradigm, each experimental sentence is followed by a "probe word", and subjects must answer whether or not the probe word had appeared in the sentence.[55][66] This task, like the acceptability judgment task, ensures that subjects are reading or listening attentively, but may avoid some of the additional processing demands of acceptability judgments, and may be used no matter what type of violation is being presented in the study.[55]

Truth-value judgment

Subjects may be instructed not to judge whether or not the sentence is grammatically acceptable or logical, but whether the proposition expressed by the sentence is true or false. This task is commonly used in psycholinguistic studies of child language.[69][70]

Active distraction and double-task

Some experiments give subjects a "distractor" task to ensure that subjects are not consciously paying attention to the experimental stimuli; this may be done to test whether a certain computation in the brain is carried out automatically, regardless of whether the subject devotes attentional resources to it. For example, one study had subjects listen to non-linguistic tones (long beeps and buzzes) in one ear and speech in the other ear, and instructed subjects to press a button when they perceived a change in the tone; this supposedly caused subjects not to pay explicit attention to grammatical violations in the speech stimuli. The subjects showed a mismatch response (MMN) anyway, suggesting that the processing of the grammatical errors was happening automatically, regardless of attention,[37] or at least that subjects were unable to consciously separate their attention from the speech stimuli.

Another related form of experiment is the double-task experiment, in which a subject must perform an extra task (such as sequential finger-tapping or articulating nonsense syllables) while responding to linguistic stimuli; this kind of experiment has been used to investigate the use of working memory in language processing.[71]

Notes

- ^ Nakai, Y; Jeong, JW; Brown, EC; Rothermel, R; Kojima, K; Kambara, T; Shah, A; Mittal, S; Sood, S; Asano, E (2017). "Three- and four-dimensional mapping of speech and language in patients with epilepsy". Brain. 140 (5): 1351–1370. doi:10.1093/brain/awx051. PMC 5405238. PMID 28334963.

- ^ a b c d e f g h Phillips, Colin; Kuniyoshi L. Sakai (2005). "Language and the brain" (PDF). Yearbook of Science and Technology. McGraw-Hill Publishers. pp. 166–169.

- ^ a b Wiśniewski, Kamil (12 August 2007). "Neurolinguistics". Język angielski online. Archived from the original on 17 April 2016. Retrieved 31 January 2009.

- ^ a b Dronkers, N.F.; O. Plaisant; M.T. Iba-Zizen; E.A. Cabanis (2007). "Paul Broca's historic cases: high resolution MR imaging of the brains of Leborgne and Lelong". Brain. 130 (Pt 5): 1432–3, 1441. doi:10.1093/brain/awm042. PMID 17405763.

- ^ a b Teter, Theresa (May 2000). "Pierre-Paul Broca". Muskingum College. Archived from the original on 5 February 2009. Retrieved 25 January 2009.

- ^ "Pierre Paul Broca". Who Named It?. Retrieved 25 January 2009.

- ^ McCaffrey, Patrick (2008). "CMSD 620 Neuroanatomy of Speech, Swallowing and Language". Neuroscience on the Web. California State University, Chico. Retrieved 22 February 2009.

- ^ Garey, Laurence (2006). Brodmann's. ISBN 9780387269177. Retrieved 22 February 2009.

- ^ Peng, F.C.C. (1985). "What is neurolinguistics?". Journal of Neurolinguistics. 1 (1): 7–30. doi:10.1016/S0911-6044(85)80003-8. S2CID 20322583.

- ^ Brown, Colin M.; and Peter Hagoort (1999). "The cognitive neuroscience of language." in Brown & Hagoort, The Neurocognition of Language. p. 6.

- ^ a b Weisler (1999), p. 293.

- ^ Hagoort, Peter (2003). "How the brain solves the binding problem for language: a neurocomputational model of syntactic processing". NeuroImage. 20: S18–29. doi:10.1016/j.neuroimage.2003.09.013. hdl:11858/00-001M-0000-0013-1E0C-2. PMID 14597293. S2CID 18845725.

- ^ a b Hall, Christopher J (2005). An Introduction to Language and Linguistics. Continuum International Publishing Group. p. 274. ISBN 978-0-8264-8734-6.

- ^ a b Hagoort, Peter; Colin M. Brown; Lee Osterhout (1999). "The neurocognition of syntactic processing." in Brown & Hagoort. The Neurocognition of Language. p. 280.

- ^ a b Hagoort, Peter (2003). "How the brain solves the binding problem for language: a neurocomputational model of syntactic processing". NeuroImage. 20: S19–S20. doi:10.1016/j.neuroimage.2003.09.013. hdl:11858/00-001M-0000-0013-1E0C-2. PMID 14597293. S2CID 18845725.

- ^ Pylkkänen, Liina. "What is neurolinguistics?" (PDF). p. 2. Archived from the original (PDF) on 4 March 2016. Retrieved 31 January 2009.

- ^ See, for example, Friederici, Angela D. (2002). "Towards a neural basis of auditory sentence processing". Trends in Cognitive Sciences. 6 (2): 78–84. doi:10.1016/S1364-6613(00)01839-8. hdl:11858/00-001M-0000-0010-E573-8. PMID 15866191., which discusses how three brain responses reflect three stages of Fodor and Frazier's model.

- ^ Weisler (1999), p. 280.

- ^ Hickok, Gregory; David Poeppel (2007). "Opinion: The cortical organization of speech processing". Nature Reviews Neuroscience. 8 (5): 393–402. doi:10.1038/nrn2113. PMID 17431404. S2CID 6199399.

- ^ a b c Embick, David; Alec Marantz; Yasushi Miyashita; Wayne O'Neil; Kuniyoshi L. Sakai (2000). "A syntactic specialization for Broca's area". Proceedings of the National Academy of Sciences. 97 (11): 6150–6154. Bibcode:2000PNAS...97.6150E. doi:10.1073/pnas.100098897. PMC 18573. PMID 10811887.

- ^ Brown, Colin M.; and Peter Hagoort (1999). "The cognitive neuroscience of language." in Brown & Hagoort. The Neurocognition of Language. p. 7.

- ^ a b Wang Yue; Joan A. Sereno; Allard Jongman; and Joy Hirsch (2003). "fMRI evidence for cortical modification during learning of Mandarin lexical tone" (PDF). Journal of Cognitive Neuroscience. 15 (7): 1019–1027. doi:10.1162/089892903770007407. hdl:1808/12458. PMID 14614812. S2CID 4812588.

- ^ a b c Menn, Lise. "Neurolinguistics". Linguistic Society of America. Archived from the original on 11 December 2008. Retrieved 18 December 2008.

- ^ "The Bilingual Brain". Brain Briefings. Society for Neuroscience. February 2008. Archived from the original on 25 July 2010. Retrieved 1 February 2009.

- ^ a b Friederici, Angela D. (2002). "Towards a neural basis of auditory sentence processing". Trends in Cognitive Sciences. 6 (2): 78–84. doi:10.1016/S1364-6613(00)01839-8. hdl:11858/00-001M-0000-0010-E573-8. PMID 15866191.

- ^ Caplan (1987), p. 11.

- ^ a b Caplan (1987), p. 12.

- ^ a b Sereno, Joan A; Yue Wang (2007). "Behavioral and cortical effects of learning a second language: The acquisition of tone". In Ocke-Schwen Bohn; Murray J. Munro (eds.). Language Experience in Second Language Speech Learning. Philadelphia: John Benjamins Publishing Company. ISBN 978-9027219732.

- ^ Li, Ping; Jennifer Legault; Kaitlyn A. Litcofsky (May 2014). "Neuroplasticity as a function of second language learning: Anatomical changes in the human brain". Cortex: A Journal Devoted to the Study of the Nervous System & Behavior. doi:10.1016/j.cortex.2014.05.001. PMID 24996640.

- ^ Ward, Jamie (2006). "The imaged brain". The Student's Guide to Cognitive Neuroscience. Psychology Press. ISBN 978-1-84169-534-1.

- ^ a b c d Kutas, Marta; Kara D. Federmeier (2002). "Electrophysiology reveals memory use in language comprehension". Trends in Cognitive Sciences. 4 (12).

- ^ Filler AG, Tsuruda JS, Richards TL, Howe FA: Images, apparatus, algorithms and methods. GB 9216383, UK Patent Office, 1992.

- ^ Ansaldo, Ana Inés; Kahlaoui, Karima; Joanette, Yves (2011). "Functional near-infrared spectroscopy: Looking at the brain and language mystery from a different angle". Brain and Language. 121 (2, number 2): 77–8. doi:10.1016/j.bandl.2012.03.001. PMID 22445199. S2CID 205792249.

- ^ a b Pylkkänen, Liina; Alec Marantz (2003). "Tracking the time course of word recognition with MEG". Trends in Cognitive Sciences. 7 (5): 187–189. doi:10.1016/S1364-6613(03)00092-5. PMID 12757816. S2CID 18214558.

- ^ Van Petten, Cyma; Luka, Barbara (2006). "Neural localization of semantic context effects in electromagnetic and hemodynamic studies". Brain and Language. 97 (3): 279–93. doi:10.1016/j.bandl.2005.11.003. PMID 16343606. S2CID 46181.

- ^ Coles, Michael G.H.; Michael D. Rugg (1996). "Event-related brain potentials: an introduction" (PDF). Electrophysiology of Mind. Oxford Scholarship Online Monographs. pp. 1–27. ISBN 978-0-19-852135-8.

- ^ a b c d Pulvermüller, Friedemann; Yury Shtyrov; Anna S. Hasting; Robert P. Carlyon (2008). "Syntax as a reflex: neurophysiological evidence for the early automaticity of syntactic processing". Brain and Language. 104 (3): 244–253. doi:10.1016/j.bandl.2007.05.002. PMID 17624417. S2CID 13870754.

- ^ a b c Frisch, Stefan; Anja Hahne; Angela D. Friederici (2004). "Word category and verb–argument structure information in the dynamics of parsing". Cognition. 91 (3): 191–219 [194]. doi:10.1016/j.cognition.2003.09.009. PMID 15168895. S2CID 44889189.

- ^ Kaan, Edith; Swaab, Tamara (2003). "Repair, revision, and complexity in syntactic analysis: an electrophysiological differentiation". Journal of Cognitive Neuroscience. 15 (1): 98–110. doi:10.1162/089892903321107855. PMID 12590846. S2CID 14934107.

- ^ van Turennout, Miranda; Hagoort, Peter; Brown, Colin M (1998). "Brain activity during speaking: from syntax to phonology in 40 milliseconds". Science. 280 (5363): 572–4. Bibcode:1998Sci...280..572V. doi:10.1126/science.280.5363.572. hdl:21.11116/0000-0002-C13A-3. PMID 9554845.

- ^ Grabowski, T., and Damasio, A. (2000). Investigating language with functional neuroimaging. San Diego, CA, US: Academic Press. 14, 425-461.

- ^ a b Pulvermüller, Friedemann; Yury Shtyrov (2003). "Automatic processing of grammar in the human brain as revealed by the mismatch negativity". NeuroImage. 20 (1): 159–172. doi:10.1016/S1053-8119(03)00261-1. PMID 14527578. S2CID 27124567.

- ^ a b Phillips, Colin; T. Pellathy; A. Marantz; E. Yellin; K. Wexler; M. McGinnis; D. Poeppel; T. Roberts (2001). "Auditory cortex accesses phonological category: an MEG mismatch study". Journal of Cognitive Neuroscience. 12 (6): 1038–1055. CiteSeerX 10.1.1.201.5797. doi:10.1162/08989290051137567. PMID 11177423. S2CID 8686819.

- ^ Shtyrov, Yury; Olaf Hauk; Friedemann Pulvermüller (2004). "Distributed neuronal networks for encoding category-specific semantic information: the mismatch negativity to action words". European Journal of Neuroscience. 19 (4): 1083–1092. doi:10.1111/j.0953-816X.2004.03126.x. PMID 15009156. S2CID 27238979.

- ^ Näätänen, Risto; Lehtokoski, Anne; Lennes, Mietta; Cheour, Marie; Huotilainen, Minna; Iivonen, Antti; Vainio, Martti; Alku, Paavo; et al. (1997). "Language-specific phoneme representations revealed by electric and magnetic brain responses". Nature. 385 (6615): 432–434. Bibcode:1997Natur.385..432N. doi:10.1038/385432a0. PMID 9009189. S2CID 4366960.

- ^ Kazanina, Nina; Colin Phillips; William Idsardi (2006). "The influence of meaning on the perception of speech sounds". Proceedings of the National Academy of Sciences of the United States of America. 103 (30): 11381–11386. Bibcode:2006PNAS..10311381K. doi:10.1073/pnas.0604821103. PMC 3020137. PMID 16849423.

- ^ Hasting, Anna S.; Sonja A. Kotz; Angela D. Friederici (2007). "Setting the stage for automatic syntax processing: the mismatch negativity as an indicator of syntactic priming". Journal of Cognitive Neuroscience. 19 (3): 386–400. doi:10.1162/jocn.2007.19.3.386. PMID 17335388. S2CID 3046335.

- ^ Example from Frisch et al. (2004: 195).

- ^ Kutas, M.; S.A. Hillyard (1980). "Reading senseless sentences: brain potentials reflect semantic incongruity". Science. 207 (4427): 203–205. Bibcode:1980Sci...207..203K. doi:10.1126/science.7350657. PMID 7350657.

- ^ Osterhout, Lee; Phillip J. Holcomb (1992). "Event-related Potentials Elicited by Grammatical Anomalies". Psychophysiological Brain Research: 299–302.

- ^ Martín-Loeches, Manuel; Roland Nigbur; Pilar Casado; Annette Hohlfeld; Werner Sommer (2006). "Semantics prevalence over syntax during sentence processing: a brain potential study of noun–adjective agreement in Spanish". Brain Research. 1093 (1): 178–189. doi:10.1016/j.brainres.2006.03.094. PMID 16678138. S2CID 1188462.

- ^ Frisch, Stefan; Anja Hahne; Angela D. Friederici (2004). "Word category and verb–argument structure information in the dynamics of parsing". Cognition. 91 (3): 191–219 [195]. doi:10.1016/j.cognition.2003.09.009. PMID 15168895. S2CID 44889189.

- ^ a b "Experiment Description: Lexical Decision and Semantic Priming". Athabasca University. 27 June 2005. Archived from the original on 8 December 2009. Retrieved 14 December 2008.

- ^ a b c Fiorentino, Robert; David Poeppel (2007). "Processing of compound words: an MEG study". Brain and Language. 103 (1–2): 8–249. doi:10.1016/j.bandl.2007.07.009. S2CID 54431968.

- ^ a b c d Friederici, Angela D.; Karsten Steinhauer; Stefan Frisch (1999). "Lexical integration: sequential effects of syntactic and semantic information". Memory & Cognition. 27 (3): 438–453. doi:10.3758/BF03211539. PMID 10355234.

- ^ Devlin, Joseph T.; Helen L. Jamison; Paul M. Matthews; Laura M. Gonnerman (2004). "Morphology and the internal structure of words". Proceedings of the National Academy of Sciences. 101 (41): 14984–14988. doi:10.1073/pnas.0403766101. PMC 522020. PMID 15358857.

- ^ Zurif, E.B.; D. Swinney; P. Prather; J. Solomon; C. Bushell (1993). "An on-line analysis of syntactic processing in Broca's and Wernicke's aphasia". Brain and Language. 45 (3): 448–464. doi:10.1006/brln.1993.1054. PMID 8269334. S2CID 8791285.

- ^ "Transcranial Magnetic Stimulation - Risks". Mayo Clinic. Retrieved 15 December 2008.

- ^ a b "Transcranial Magnetic Stimulation (TMS)". National Alliance on Mental Illness. Archived from the original on 8 January 2009. Retrieved 15 December 2008.

- ^ A.R. Wyler; A.A. Ward, Jr (1981). "Neurons in human epileptic cortex. Response to direct cortical stimulation". Journal of Neurosurgery. 55 (6): 904–8. doi:10.3171/jns.1981.55.6.0904. PMID 7299464.

- ^ Hagoort, Peter (2005). "On Broca, brain, and binding: a new framework". Trends in Cognitive Sciences. 9 (9): 416–23. doi:10.1016/j.tics.2005.07.004. hdl:11858/00-001M-0000-0013-1E16-A. PMID 16054419. S2CID 2826729.

- ^ One common exception to this is studies using the mismatch paradigm, in which subjects are often instructed to watch a silent movie or otherwise not pay attention actively to the stimuli. See, for example:

- Pulvermüller, Friedemann; Ramin Assadollahi (2007). "Grammar or serial order?: discrete combinatorial brain mechanisms reflected by the syntactic mismatch negativity". Journal of Cognitive Neuroscience. 19 (6): 971–980. doi:10.1162/jocn.2007.19.6.971. PMID 17536967. S2CID 6682016.

- Pulvermüller, Friedemann; Yury Shtyrov (2003). "Automatic processing of grammar in the human brain as revealed by the mismatch negativity". NeuroImage. 20 (1): 159–172. doi:10.1016/S1053-8119(03)00261-1. PMID 14527578. S2CID 27124567.

- ^ Van Petten, Cyma (1993). "A comparison of lexical and sentence-level context effects in event-related potentials". Language and Cognitive Processes. 8 (4): 490–91. doi:10.1080/01690969308407586.

- ^ a b c Hahne, Anja; Angela D. Friederici (2002). "Differential task effects on semantic and syntactic processes as revealed by ERPs". Cognitive Brain Research. 13 (3): 339–356. doi:10.1016/S0926-6410(01)00127-6. hdl:11858/00-001M-0000-0010-ABA4-1. PMID 11918999.

- ^ Zheng Ye; Yue-jia Luo; Angela D. Friederici; Xiaolin Zhou (2006). "Semantic and syntactic processing in Chinese sentence comprehension: evidence from event-related potentials". Brain Research. 1071 (1): 186–196. doi:10.1016/j.brainres.2005.11.085. PMID 16412999. S2CID 18324338.

- ^ a b c Frisch, Stefan; Anja Hahne; Angela D. Friederici (2004). "Word category and verb–argument structure information in the dynamics of parsing". Cognition. 91 (3): 200–201. doi:10.1016/j.cognition.2003.09.009. PMID 15168895. S2CID 44889189.

- ^ Osterhout, Lee (1997). "On the brain response to syntactic anomalies: manipulations of word position and word class reveal individual differences". Brain and Language. 59 (3): 494–522 [500]. doi:10.1006/brln.1997.1793. PMID 9299074. S2CID 14354089.

- ^ Hagoort, Peter (2003). "Interplay between syntax and semantics during sentence comprehension: ERP effects of combining syntactic and semantic violations". Journal of Cognitive Neuroscience. 15 (6): 883–899. CiteSeerX 10.1.1.70.9046. doi:10.1162/089892903322370807. PMID 14511541. S2CID 15814199.

- ^ Gordon, Peter. "The Truth-Value Judgment Task" (PDF). In D. McDaniel; C. McKee; H. Cairns (eds.). Methods for assessing children's syntax. Cambridge: MIT Press. p. 1. Archived from the original (PDF) on 9 June 2010. Retrieved 14 December 2008.

- ^ Crain, Stephen, Luisa Meroni, and Utako Minai. "If Everybody Knows, then Every Child Knows." University of Maryland at College Park. Retrieved 14 December 2008.

- ^ Rogalsky, Corianne; William Matchin; Gregory Hickok (2008). "Broca's Area, Sentence Comprehension, and Working Memory: An fMRI Study". Frontiers in Human Neuroscience. 2: 14. doi:10.3389/neuro.09.014.2008. PMC 2572210. PMID 18958214.

References

- Colin M. Brown; Peter Hagoort, eds. (1999). The Neurocognition of Language. New York: Oxford University Press.

- Caplan, David (1987). Neurolinguistics and Linguistic Aphasiology: An Introduction. Cambridge University Press. p. 498. ISBN 978-0-521-31195-3.

- Ingram, John C.L. (2007). Neurolinguistics: An Introduction to Spoken Language Processing and Its Disorders. Cambridge University Press. p. 420. ISBN 978-0-521-79190-8.

- Weisler, Stephen; Slavoljub P. Milekic (1999). "Brain and Language". Theory of Language. MIT Press. p. 344. ISBN 978-0-262-73125-6.

Further reading

- Ahlsén, Elisabeth (2006). Introduction to Neurolinguistics. John Benjamins Publishing Company. p. 212. ISBN 978-90-272-3233-5.

- Moro, Andrea (2008). The Boundaries of Babel. The Brain and the Enigma of Impossible Languages. MIT Press. p. 257. ISBN 978-0-262-13498-9. Archived from the original on 7 October 2012. Retrieved 18 September 2009.

- Stemmer, Brigitte; Harry A. Whitaker (1998). Handbook of Neurolinguistics. Academic Press. p. 788. ISBN 978-0-12-666055-5.

Some relevant journals include the Journal of Neurolinguistics and Brain and Language. Both are subscription access journals, though some abstracts may be generally available.

External links

- Society for Neuroscience (SfN)

- Neurolinguistics Resources from the LSA

- Talking Brains, blog by neurolinguists Greg Hickok and David Poeppel